¶ Access Conditions

Requirements:

- Account on the e-science.pl platform

- Active service "Process on Supercomputer"

¶ Console Access

¶ Check if you have GPU access

Log in to the access server:

ssh user@ui.wcss.pl

Check the service balance:

service-balance --check-gpu

Example output indicating GPU access:

GPU AVAILABLE FOR: hpc-xxxxx-123456789

If you do not meet the above requirements, see the section "I have a problem with..." below.
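For use in scripts, the check above can be wrapped in a small helper. The following is a minimal sketch, assuming that GPU access is always reported on a line starting with "GPU AVAILABLE FOR:" as in the sample output. The function name has_gpu_access is hypothetical and not part of the platform's tooling.

```shell
#!/bin/bash
# Hypothetical helper: reads `service-balance --check-gpu` output on stdin and
# succeeds if at least one line reports GPU access.
# Assumption: the line format matches the sample output shown above.
has_gpu_access() {
    grep -q '^GPU AVAILABLE FOR: '
}

# Run the real check only where the command exists (i.e. on ui.wcss.pl).
if command -v service-balance >/dev/null 2>&1; then
    if service-balance --check-gpu | has_gpu_access; then
        echo "GPU access confirmed"
    else
        echo "No GPU access found for your services"
    fi
fi
```

The helper only inspects text, so it can also be applied to saved output from an earlier run.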

¶ Interactive shell Job

Run an interactive job with a GPU accelerator:

sub-interactive-lem-gpu

List the available GPUs:

nvidia-smi

Output:

Wed Apr  2 12:41:26 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.124.06             Driver Version: 570.124.06     CUDA Version: 12.8     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA H100                    Off |   00000000:66:00.0 Off |                    0 |
| N/A   33C    P0             69W /  700W |       1MiB /  95830MiB |      0%      Default |
|                                         |                        |             Disabled |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI              PID   Type   Process name                        GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|  No running processes found                                                             |
+-----------------------------------------------------------------------------------------+
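When only a few values are needed, nvidia-smi can print them in CSV form instead of the full table. The following is a minimal sketch using the --query-gpu and --format options of nvidia-smi. The summarize_gpus function is a hypothetical helper introduced here for illustration.

```shell
#!/bin/bash
# Hypothetical helper: turns "name, total, used" CSV lines (values in MiB)
# into a one-line summary per GPU.
summarize_gpus() {
    while IFS=',' read -r name total used; do
        total=${total// /}   # strip the space nvidia-smi puts after each comma
        used=${used// /}
        echo "${name}: $((total - used)) MiB free of ${total} MiB"
    done
}

# Query the GPUs only where nvidia-smi exists (i.e. inside a GPU job).
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,memory.total,memory.used \
               --format=csv,noheader,nounits | summarize_gpus
fi
```

For the H100 shown above, the input line would be "NVIDIA H100, 95830, 1".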

¶ PyTorch in a Container

¶ In an Interactive Job

- Log in to ui.wcss.pl:

ssh username@ui.wcss.pl

- Start an interactive job:

srun -N 1 -c 4 --mem=10gb -t60 -p H100 --gres=gpu:hopper:1 --pty /bin/bash

- Run the nvidia-smi command in a container:

apptainer exec --nv /lustre/software-data/container-images/pytorch_latest.sif nvidia-smi
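To confirm that PyTorch inside the container can actually use the GPU, a short Python check can be run the same way. This is a sketch, assuming the image provides a python executable with PyTorch installed. The file name check_gpu.py is hypothetical.

```shell
#!/bin/bash
# Write a small PyTorch check script (hypothetical file name).
cat > check_gpu.py <<'EOF'
import torch

# True when PyTorch can see at least one CUDA device
print("CUDA available:", torch.cuda.is_available())
if torch.cuda.is_available():
    print("Device:", torch.cuda.get_device_name(0))
EOF

# Run it in the container; --nv exposes the host NVIDIA driver and GPUs.
# Guarded so the snippet is harmless outside the cluster.
image=/lustre/software-data/container-images/pytorch_latest.sif
if command -v apptainer >/dev/null 2>&1 && [ -f "$image" ]; then
    apptainer exec --nv "$image" python check_gpu.py
fi
```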

¶ In a Batch Job

- Log in to ui.wcss.pl:

ssh username@ui.wcss.pl

- Create a file test-job.sh:

#!/bin/bash

#SBATCH -N 1

#SBATCH -c 4

#SBATCH --mem=10gb

#SBATCH --time=0-01:00:00

#SBATCH --job-name=example

#SBATCH -p lem-gpu

#SBATCH --gres=gpu:hopper:1

apptainer exec --nv /lustre/software-data/container-images/pytorch_latest.sif python obliczenia_skrypt.py

- Submit the batch job and read the output file:

sbatch test-job.sh
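Because test-job.sh sets no --output option, Slurm writes the job's output to slurm-<jobid>.out in the directory the job was submitted from. The following is a minimal sketch of submitting the job and locating that file, assuming sbatch's --parsable option, which prints the job ID optionally followed by ";cluster". The output_file_for helper is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: map `sbatch --parsable` output ("jobid" or
# "jobid;cluster") to Slurm's default output file name.
output_file_for() {
    local job_id=${1%%;*}    # drop an optional ";cluster" suffix
    echo "slurm-${job_id}.out"
}

# Submit only where Slurm is available (i.e. on ui.wcss.pl).
if command -v sbatch >/dev/null 2>&1 && [ -f test-job.sh ]; then
    submitted=$(sbatch --parsable test-job.sh)
    echo "Output will appear in $(output_file_for "$submitted")"
    squeue -j "${submitted%%;*}"    # show the job's current state
fi
```

Once the job has finished, the file can be read with cat or less.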

¶ Scientific software

¶ Subscripts

Subscripts are specially prepared scripts that run specific versions of a given program on the GPU resources.

List of subscripts

Type the name of a subscript and press Enter to display information about its parameters.

Example for MATLAB R2022b, using the sub-matlab-R2022b script:

login@ui: ~ $ sub-matlab-R2022b

Usage: /usr/local/bin/bem2/sub-matlab-R2022b [PARAMETERS] INPUT_FILE MOLECULE_FILE

Parameters:

-p | --partition PARTITION Set partition (queue). Default = normal

-n | --nodes NODES Set number of nodes. Default = 1

-c | --cores CORES Up to 48. Default = 1

-m | --memory MEMORY In GB, up to 390 (must be integer value). Default = 1

-t | --time TIME_LIMIT In hours. Default = 1

Run the script on the lem-gpu partition, for example:

sub-matlab-R2022b -p lem-gpu -n 1 -c 1 -m 3 -t 50

¶ Modules

¶ Graphical Access

¶ JupyterLab with PyTorch

- Log in to https://ood.e-science.pl

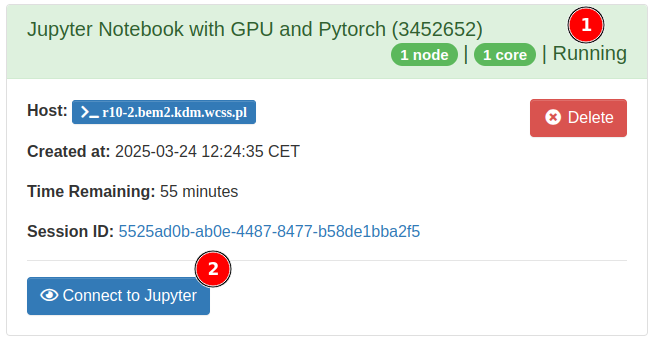

- Select the application Jupyter Notebook with GPU and PyTorch.

- Click Launch.

- Wait until the job status changes to Running (1) and click Connect to Jupyter (2).

A new window will open in the browser with the JupyterLab interface.

¶ I have a problem with...

- No e-science account: If you do not have an e-science account, register.

- No "Process on a Supercomputer" service: Submit a request for a new service or modification of an existing one.

- No GPU access within an active service: Submit a request to increase resources for the selected service.

- GPU hours have run out in the service: Submit a request to increase resources.

¶ See also

- Who can become a Supercomputer user?

- How to gain access to the Supercomputer?

- Service Requests: Instructions on how to fill out online forms

- users.e-science.pl: Manage teams, users, and access rights

- OpenOnDemand: Graphical access to applications on the Supercomputer

- Resource usage register: How to check available resources in a service

- HPC info: Detailed information about resource usage in jobs and services

- set-default-service: Change the default service when submitting jobs

- Sub-scripts: Specially prepared scripts for launching specific versions of a given program

- Modules: Use the module mechanism

The full version of the user documentation is available here.

If you do not find a solution in the above documentation, please contact kdm@wcss.pl or call 71 320 47 45.